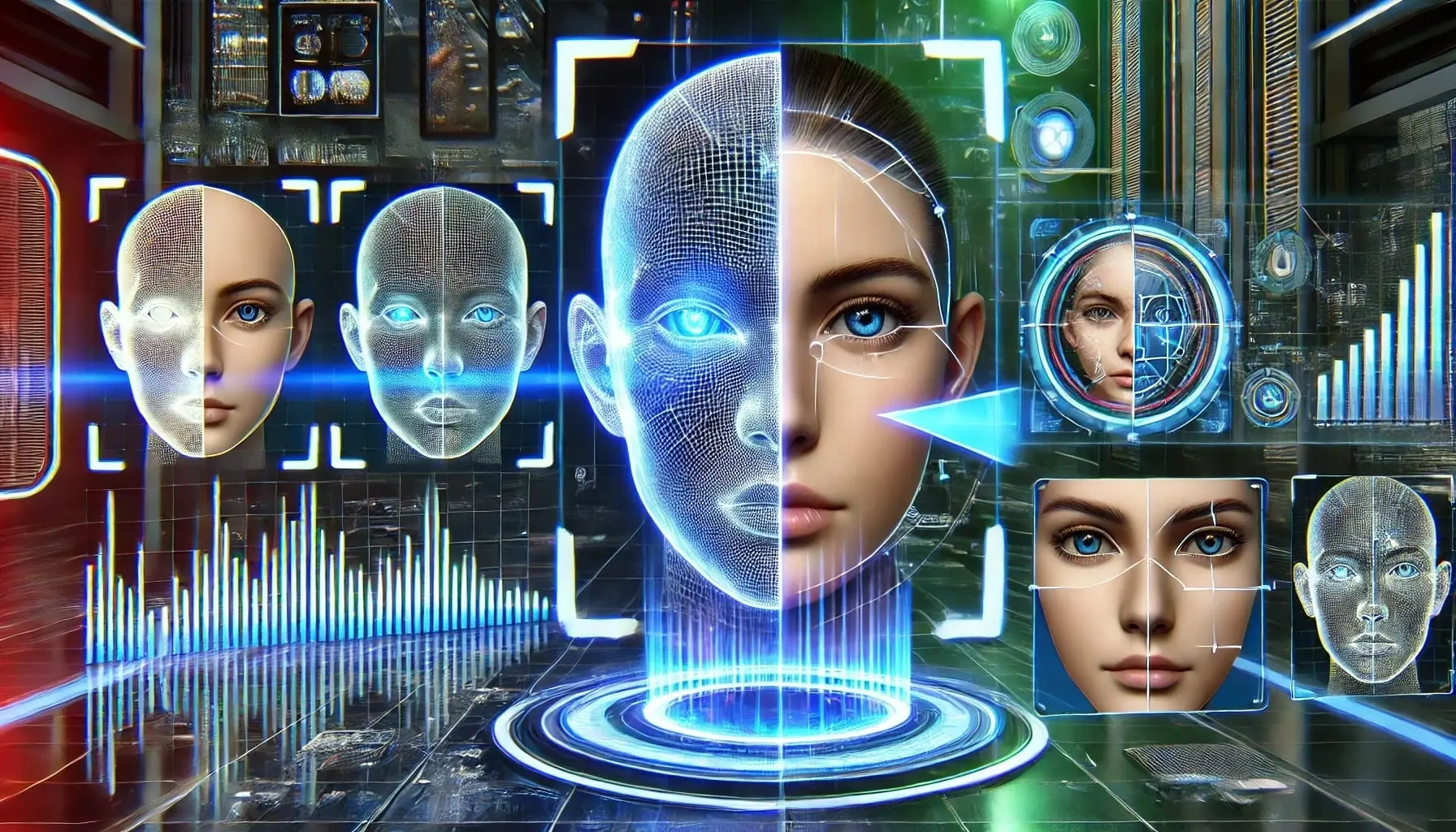

Deepfake Fraud Is Getting Smarter, and Businesses Are the Target

Not long ago, deepfakes were mostly associated with viral videos and internet pranks. Today, they're something far more serious. By 2026, deepfake technology has become a practical tool for fraud, manipulation, and large-scale cybercrime, and businesses are squarely in the crosshairs.

The most unsettling part isn’t just how realistic deepfakes have become. It’s how effectively they exploit trust. When a voice sounds like your CEO or a video call looks perfectly legitimate, instinct often overrides caution. Attackers know this, and they’re using it to bypass traditional security measures with alarming success.

Why Deepfakes Work So Well in Corporate Environments

Most enterprise security systems are built to verify credentials, devices, and networks. They aren’t designed to question whether a person on the other end of a call is real.

Deepfakes exploit that gap. They target human decision-making rather than technical defenses. Finance teams receive urgent voice messages authorizing transfers. HR teams interview candidates who don't exist. Legal departments are pressured into sharing documents during convincing video calls.

These attacks don’t rely on malware or brute force. They rely on believability.

The AI Arms Race Behind Deepfake Fraud

The same advances driving innovation in AI security are also fueling more sophisticated attacks. Generative models can now replicate tone, facial expressions, and speech patterns with minimal training data. A few minutes of audio or video is often enough.

At the same time, deepfake detection has become a complex challenge. Simple visual cues no longer work reliably: lighting, lip movement, and audio synchronization in synthetic media are improving faster than many organizations can adapt.

This cat-and-mouse dynamic is one of the most important cybersecurity trends shaping 2026.

Financial Loss Is Only Part of the Damage

When deepfake fraud succeeds, the immediate financial impact can be severe. But the long-term damage often runs deeper.

Trust erodes. Employees second-guess leadership communications. Customers question authenticity. Partners worry about the integrity of interactions. Once confidence is shaken, it's difficult to rebuild.

This makes deepfake attacks particularly dangerous for enterprises that rely on rapid decision-making, remote collaboration, and digital communication.

Deepfakes and the Data Breach Risk

Deepfakes are often used as the entry point for larger attacks. Once an attacker gains trust, they can extract sensitive data, credentials, or internal access details.

In this way, deepfake fraud is directly tied to data breach prevention. A convincing impersonation can bypass controls that would normally stop unauthorized access.

Deepfake-based social engineering can also undermine ransomware protection, especially when attackers gather enough information to move laterally within a network.

Why Traditional Training Isn’t Enough

Many organizations respond to deepfake threats by updating employee training. While awareness is important, it has limits.

Humans are not reliable detection systems, especially under pressure. Attackers intentionally create urgency to short-circuit rational thinking. No amount of training can guarantee that someone won't make a mistake when faced with a perfectly realistic voice or video.

This is why deepfake detection needs to be supported by technology, not just policy.

Making Deepfake Detection Part of Enterprise Security

Modern enterprise security strategies treat deepfake detection as a layered defense. This includes:

- Verifying high-risk requests through secondary channels

- Monitoring anomalies in communication patterns

- Using AI tools trained to identify synthetic media

- Limiting decision authority based on context and risk

The goal isn’t to create friction everywhere, but to slow things down just enough when something feels off.
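To make the layered approach more concrete, here is a minimal sketch of how a payment workflow might combine three of those layers: decision authority limits, a simple anomaly score, and a trigger for out-of-band verification. Everything in it is a hypothetical illustration, not a product API or a recommended policy. The role names, limits, scoring weights, and the threshold of 2 are all assumptions chosen for readability. "Verify out of band" is assumed to mean calling the requester back on a number taken from an internal directory, never one supplied in the request itself.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    VERIFY_OUT_OF_BAND = "verify_out_of_band"
    BLOCK_PENDING_REVIEW = "block_pending_review"


@dataclass
class PaymentRequest:
    requester: str        # role of the person the request claims to come from
    amount: float
    channel: str          # e.g. "voice", "video", "email", "ticketing"
    marked_urgent: bool
    new_beneficiary: bool


# Hypothetical per-role approval limits (illustrative values only).
APPROVAL_LIMITS = {"cfo": 250_000.0, "finance_manager": 25_000.0}

# Channels that are easy to impersonate with synthetic voice, video, or text.
SPOOFABLE_CHANNELS = {"voice", "video", "email"}


def risk_score(req: PaymentRequest, typical_amount: float) -> int:
    """Add up simple anomaly signals; the weights are assumptions."""
    score = 0
    if req.channel in SPOOFABLE_CHANNELS:
        score += 1
    if req.marked_urgent:
        score += 1  # manufactured urgency is a classic pressure tactic
    if req.new_beneficiary:
        score += 2
    if typical_amount > 0 and req.amount > 3 * typical_amount:
        score += 2  # far outside this requester's usual pattern
    return score


def decide(req: PaymentRequest, typical_amount: float) -> Action:
    """Apply authority limits first, then the anomaly threshold."""
    # Unknown roles get a limit of 0, so any positive amount is blocked.
    limit = APPROVAL_LIMITS.get(req.requester, 0.0)
    if req.amount > limit:
        return Action.BLOCK_PENDING_REVIEW
    if risk_score(req, typical_amount) >= 2:
        # Call back via a directory number, never one given in the request.
        return Action.VERIFY_OUT_OF_BAND
    return Action.PROCEED
```

For example, an urgent voice request that appears to come from the CFO, asking for 200,000 to go to a new beneficiary when that requester's typical transfer is 40,000, stays within the authority limit but scores well above the threshold, so it is routed to a callback rather than paid. A routine, non-urgent ticketing request for a familiar amount and beneficiary passes straight through, which reflects the goal of adding friction only where something looks off.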

As AI agents take on more responsibility within organizations, AI agent security also becomes critical. Autonomous systems must be able to distinguish between legitimate instructions and manipulated inputs or, when they cannot, escalate decisions for human review.
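One way an agent could tell legitimate instructions from injected ones is to accept only instructions that carry a valid message authentication code, and to escalate anything high-impact to a person even when the code checks out. The sketch below uses HMAC-SHA256 from Python's standard library for this. It is an illustrative assumption, not a technique the article prescribes. The key, the action names, and the `route` function are all hypothetical, and a real deployment would need proper key management. Note that a valid signature only proves the instruction came from someone holding the key. It does not prove that person wasn't deceived, which is why the human-review step remains.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass

# Hypothetical shared secret, assumed to be provisioned to the agent out of band.
SECRET_KEY = b"example-key-rotate-me"

# Hypothetical actions the agent may perform without a human in the loop.
LOW_IMPACT_ACTIONS = {"summarize_report", "draft_email"}


@dataclass
class Instruction:
    action: str
    payload: str
    signature: str  # hex-encoded HMAC-SHA256 over (action, payload)


def sign(action: str, payload: str, key: bytes = SECRET_KEY) -> str:
    """Sign a JSON encoding of the fields so their boundaries stay unambiguous."""
    message = json.dumps([action, payload]).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def route(instr: Instruction, key: bytes = SECRET_KEY) -> str:
    """Return 'reject', 'escalate_to_human', or 'execute'."""
    expected = sign(instr.action, instr.payload, key).encode("ascii")
    # Constant-time comparison; encode to bytes so non-ASCII input can't raise.
    if not hmac.compare_digest(expected, instr.signature.encode("utf-8")):
        return "reject"  # unsigned, forged, or tampered instruction
    if instr.action not in LOW_IMPACT_ACTIONS:
        return "escalate_to_human"  # authentic, but too consequential to automate
    return "execute"
```

With this design, an instruction whose payload was altered after signing is rejected outright, while a correctly signed request for a consequential action such as a transfer is still paused for a person to confirm.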

Preparing for a Future Where Reality Is Flexible

Deepfakes are not a temporary trend. As AI improves, synthetic media will become cheaper, faster, and harder to detect. The question isn't whether your organization will encounter deepfake attempts; it's whether you'll recognize them in time.

Forward-looking companies are already adjusting their cybersecurity posture, treating deepfake detection as a core capability rather than a niche concern.

Platforms like Hexon.bot help organizations analyze emerging AI security risks, strengthen verification processes, and align defenses with real-world threat behavior without disrupting day-to-day operations.

Trust, Rebuilt for the AI Era

In a world where seeing and hearing are no longer proof, trust must be designed into systems, not assumed. Deepfake fraud challenges some of the most basic assumptions businesses make about communication.

Those who adapt early, combining human judgment with AI-driven security, will be better prepared for what comes next. In 2026, protecting trust is just as important as protecting data.