Gradients and Why Optimization Depends on Them

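Gradients are one of the most important ideas in data science and machine learning. They tell a model how to improve its parameters step by step. For a function of several variables, the gradient is the vector of its partial derivatives. It points in the direction in which the function increases fastest, and its length gives the rate of that increase. Moving the opposite way, along the negative gradient, reduces the function most quickly. In simple terms, it tells a model which way to move its parameters to reduce errors. Without gradients, optimizing models with many parameters would be largely blind and far less efficient.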
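
To make "direction and rate of change" concrete, here is a minimal sketch using an illustrative function f(x, y) = x² + 3y² (chosen for this example, not taken from any particular model). It compares the analytic gradient with a central finite-difference estimate at one point.

```python
# A minimal sketch: estimate the gradient of f(x, y) = x**2 + 3*y**2
# with central finite differences and compare it to the analytic gradient.

def f(x, y):
    return x**2 + 3 * y**2

def analytic_grad(x, y):
    # Partial derivatives: df/dx = 2x, df/dy = 6y
    return (2 * x, 6 * y)

def numerical_grad(func, x, y, h=1e-5):
    # Central differences approximate each partial derivative
    dfdx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    dfdy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return (dfdx, dfdy)

point = (1.0, 2.0)
print(analytic_grad(*point))        # (2.0, 12.0)
print(numerical_grad(f, *point))    # approximately (2.0, 12.0)
```

At (1, 2) the gradient is (2, 12): f rises six times faster along y than along x, and stepping toward (-2, -12) lowers f fastest.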

Most modern learning algorithms, and neural networks in particular, rely on this concept to improve performance. If you are beginning your learning journey and want to understand these foundations deeply, join the Data Science Courses in Bangalore at FITA Academy to build strong conceptual clarity along with practical exposure.

Understanding Optimization in Machine Learning

Optimization is the process of making a model perform better by reducing its error. This error is measured using a loss function. The goal of optimization is to find parameter values that minimize this loss. Models do not magically know the best parameters.

They improve through repeated adjustments, and each adjustment is guided by the gradient of the loss. Without gradients, an optimizer would have to probe the loss by trial and error, for example by trying random parameter changes, which becomes slow and unreliable as the number of parameters grows. Gradients provide a structured way to move toward better solutions.
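
As a small illustration of a loss function and its gradient, the sketch below assumes a one-feature linear model y_hat = w * x + b and mean squared error as the loss. The toy data is hypothetical and follows y = 2x + 1 exactly, so the best parameters are known in advance.

```python
# A minimal sketch: mean squared error for a one-feature linear model
# y_hat = w * x + b, plus its analytic gradient with respect to w and b.
# The toy data below is hypothetical and follows y = 2x + 1 exactly.

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

def mse_loss(w, b):
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n

def mse_grad(w, b):
    n = len(xs)
    # dL/dw = mean(2 * residual * x), dL/db = mean(2 * residual)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db

print(mse_loss(0.0, 0.0))  # 41.0
print(mse_grad(0.0, 0.0))  # (-35.0, -12.0)
print(mse_loss(2.0, 1.0))  # 0.0
```

Both partial derivatives are negative at w = 0, b = 0, so increasing w and b lowers the loss. At w = 2, b = 1 the loss and the gradient are both zero, which is exactly the minimum an optimizer should find.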

How Gradients Guide Learning

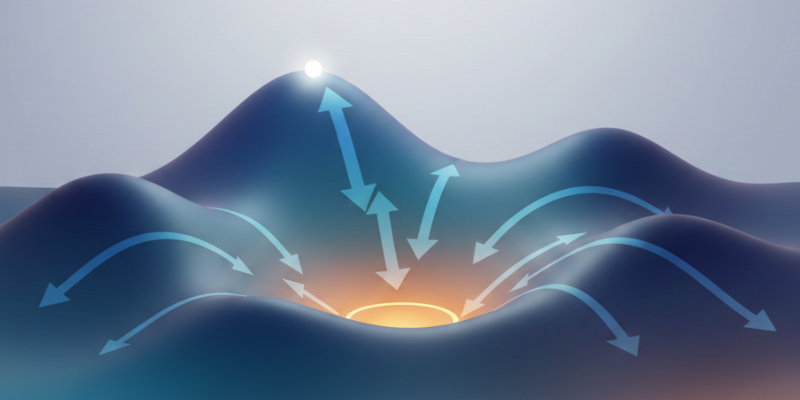

Gradients act like a compass for learning algorithms. Each component of the gradient indicates how much a small change in the corresponding parameter will change the loss. In gradient descent, the size of each update is proportional to the gradient: when the gradient is large, the model makes a stronger correction, and when it is small, only minor changes are made.

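This feedback loop helps models learn efficiently. Gradient-based methods update parameters in small steps, and the size of those steps is controlled by a learning rate chosen to avoid instability. Over time, the gradient shrinks and the model reaches a point where it is close to zero and further improvements become minimal. This point is often called an optimum, although for non-convex losses, such as those of neural networks, it may be only a local minimum rather than the best possible solution.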
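
The loop described above can be sketched in a few lines. This is an illustrative example only: the function f(x) = (x - 3)², the learning rate of 0.1, and the stopping tolerance are all assumptions chosen to keep the behavior easy to follow.

```python
# A minimal sketch of the feedback loop: repeatedly step against the
# gradient of f(x) = (x - 3)**2 and stop once the gradient is nearly zero.
# The function, learning rate, and tolerance are illustrative choices.

def grad_f(x):
    return 2 * (x - 3)   # derivative of (x - 3)**2

def minimize(x, learning_rate=0.1, tol=1e-6, max_steps=1000):
    for step in range(max_steps):
        g = grad_f(x)
        if abs(g) < tol:          # improvements have become negligible
            return x, step
        x -= learning_rate * g    # update size is proportional to |g|
    return x, max_steps

x_min, steps = minimize(0.0)
print(round(x_min, 4), steps)     # x_min ends up very close to 3.0
```

Starting from x = 0, the first update moves x by 0.6; after that, each step is 0.8 times the previous one because the gradient shrinks as x approaches 3. The loop slows down on its own near the minimum, which is the behavior the paragraph describes.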

Gradient Descent Explained Simply

The most widely used optimization method is gradient descent. It works by moving parameters in the opposite direction of the gradient, which is the direction in which the error decreases fastest. Each update has the form new parameter = old parameter - learning rate × gradient. With a suitable learning rate, each iteration brings the model closer to a minimum of the loss. The learning rate controls how big each step is. A very large step can overshoot the solution.

A very small step can slow learning. Understanding this balance is essential for building effective models. Learners who want hands-on practice with these ideas can consider taking a Data Science Course in Hyderabad to apply theory through guided projects and real datasets.
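
The learning-rate trade-off is easy to see on the simplest possible loss. The sketch below assumes f(x) = x², whose gradient is 2x, and runs 20 gradient descent steps from x = 1.0 with three illustrative learning rates.

```python
# A minimal sketch: gradient descent on f(x) = x**2 (gradient 2x) with
# three learning rates, starting from x = 1.0. Each update is
# x <- x - learning_rate * 2x, so x is multiplied by (1 - 2 * learning_rate).

def run_gradient_descent(learning_rate, x=1.0, steps=20):
    for _ in range(steps):
        x = x - learning_rate * 2 * x
    return x

for lr in (0.01, 0.4, 1.1):
    # 0.01: still far from 0 (too slow); 0.4: essentially 0;
    # 1.1: sign flips every step and |x| grows (overshooting)
    print(f"lr={lr}: x after 20 steps = {run_gradient_descent(lr):.6g}")
```

For this particular function the multiplier 1 - 2 × learning rate explains everything: descent converges only when its absolute value is below 1, that is, for learning rates between 0 and 1. Real losses have no such simple threshold, which is why the learning rate is usually tuned empirically.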

Why Gradients Are Essential for Deep Learning

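Deep learning models contain many layers and often millions of parameters, so tuning them by hand is impossible. Gradients make automated learning feasible. The backpropagation algorithm applies the chain rule of calculus to pass error signals backward from the output through each layer, computing the gradient of the loss with respect to every parameter. Each layer can then adjust its weights in the direction that reduces the loss.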
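
Backpropagation can be shown on a network small enough to follow by hand. The sketch below assumes a hypothetical two-layer model with a single scalar weight per layer and a tanh hidden unit. It computes the gradients backward with the chain rule, then checks them against finite differences.

```python
import math

# A minimal sketch of backpropagation for a hypothetical two-layer network
# with one scalar weight per layer:
#   h = tanh(w1 * x), y_hat = w2 * h, loss = (y_hat - y) ** 2

def forward(w1, w2, x, y):
    h = math.tanh(w1 * x)          # hidden layer
    y_hat = w2 * h                 # output layer
    loss = (y_hat - y) ** 2
    return loss, h, y_hat

def backward(w1, w2, x, y):
    _, h, y_hat = forward(w1, w2, x, y)
    d_yhat = 2 * (y_hat - y)       # dL/dy_hat
    d_w2 = d_yhat * h              # gradient for the output weight
    d_h = d_yhat * w2              # error signal sent back to the hidden layer
    d_z = d_h * (1 - h ** 2)       # through tanh: d tanh(z)/dz = 1 - tanh(z)**2
    d_w1 = d_z * x                 # gradient for the hidden weight
    return d_w1, d_w2

def numerical_grads(w1, w2, x, y, eps=1e-6):
    # Central-difference estimates, used only to check backward()
    def loss_at(a, b):
        return forward(a, b, x, y)[0]
    d_w1 = (loss_at(w1 + eps, w2) - loss_at(w1 - eps, w2)) / (2 * eps)
    d_w2 = (loss_at(w1, w2 + eps) - loss_at(w1, w2 - eps)) / (2 * eps)
    return d_w1, d_w2

print(backward(0.5, -1.2, x=2.0, y=1.0))
print(numerical_grads(0.5, -1.2, x=2.0, y=1.0))  # should closely match the line above
```

Frameworks such as PyTorch and JAX automate this backward pass for arbitrary networks. The hand-written version only shows what that pass computes.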

Deep networks are trained almost exclusively with gradient-based methods; without gradients, they would struggle to learn meaningful patterns. Image recognition, speech processing, and recommendation systems all depend on gradient-based optimization. This is why gradients are often described as the engine behind intelligent systems.

Common Challenges with Gradients

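Gradients are powerful but not perfect. Because backpropagation multiplies local derivatives layer by layer, gradients in deep networks can shrink toward zero as they travel back to the early layers, so those layers learn very slowly. This is known as the vanishing gradient problem. In other cases, the same repeated multiplication makes gradients grow very large, causing unstable, oversized updates. This is called the exploding gradient problem.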
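
Both problems come from multiplying many per-layer derivatives together. The sketch below assumes two hypothetical chains of scalar layers: one uses sigmoid activations, whose derivative is at most 0.25, and one is purely linear with a weight of 1.5 per layer. It shows how the end-to-end gradient changes with depth.

```python
import math

# A minimal sketch: backpropagation multiplies one local derivative per layer,
# so over many layers the product can shrink toward zero or blow up.
# Both hypothetical "networks" below are chains of scalar layers.

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_chain_grad(depth, weight=1.0, x=0.5):
    # Each layer: a = sigmoid(weight * a_prev); local derivative = weight * s * (1 - s)
    a, grad = x, 1.0
    for _ in range(depth):
        s = sigmoid(weight * a)
        grad *= weight * s * (1 - s)   # s * (1 - s) is at most 0.25
        a = s
    return grad

def linear_chain_grad(depth, weight=1.5):
    # Each layer: a = weight * a_prev; local derivative = weight
    grad = 1.0
    for _ in range(depth):
        grad *= weight
    return grad

for depth in (5, 15, 30):
    print(depth, sigmoid_chain_grad(depth), linear_chain_grad(depth))
```

With 30 sigmoid layers the gradient is below 0.25³⁰, which is effectively zero (vanishing). With 30 linear layers of weight 1.5 it exceeds 190,000 (exploding).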

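Researchers address these issues with better architectures and optimization strategies, such as ReLU activations, residual connections, careful weight initialization, normalization layers, and gradient clipping. Understanding these challenges helps practitioners design more reliable models. It also makes troubleshooting easier when models fail to converge.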
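
Gradient clipping is one of the simplest of these strategies. Below is a minimal sketch of clipping by norm, operating on a plain list of gradient values. The threshold `max_norm` is an illustrative parameter, and deep learning libraries provide their own built-in versions of this.

```python
import math

# A minimal sketch of gradient clipping by norm, one common strategy against
# exploding gradients: if the gradient's L2 norm exceeds max_norm, rescale it
# so its norm equals max_norm while keeping its direction.

def clip_by_norm(grad, max_norm):
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)          # small enough: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grad]

print(clip_by_norm([3.0, 4.0], max_norm=1.0))   # about [0.6, 0.8]: norm 5 rescaled to 1
print(clip_by_norm([0.3, 0.4], max_norm=1.0))   # [0.3, 0.4]: unchanged
```

Clipping caps the size of a single update but keeps its direction, so training can still make progress on the update that would otherwise have destabilized it.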

Gradients are the backbone of optimization in data science and machine learning. They provide direction, efficiency, and structure to the learning process. Without them, much of modern machine learning, and deep learning in particular, would not be practical.

A strong grasp of gradients helps you understand how models learn and improve. If you want to master these fundamentals and apply them confidently, consider enrolling in a Data Science Course in Ahmedabad to strengthen your skills and advance your career with practical learning support.